My Design Journey

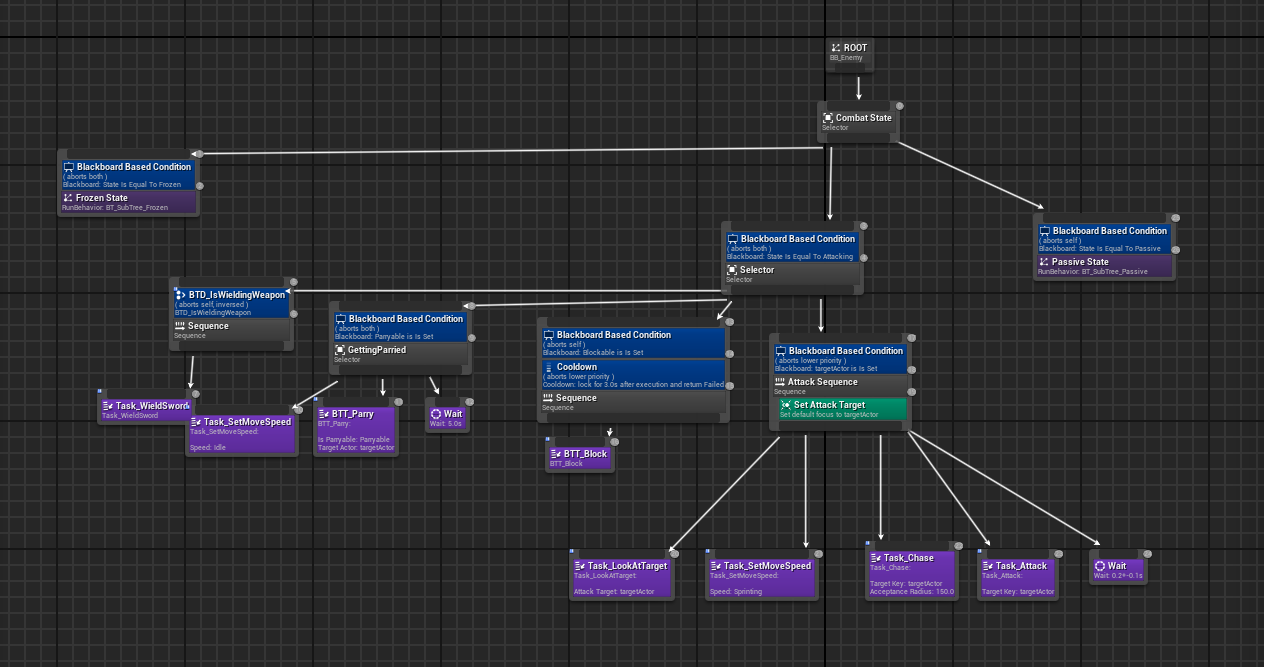

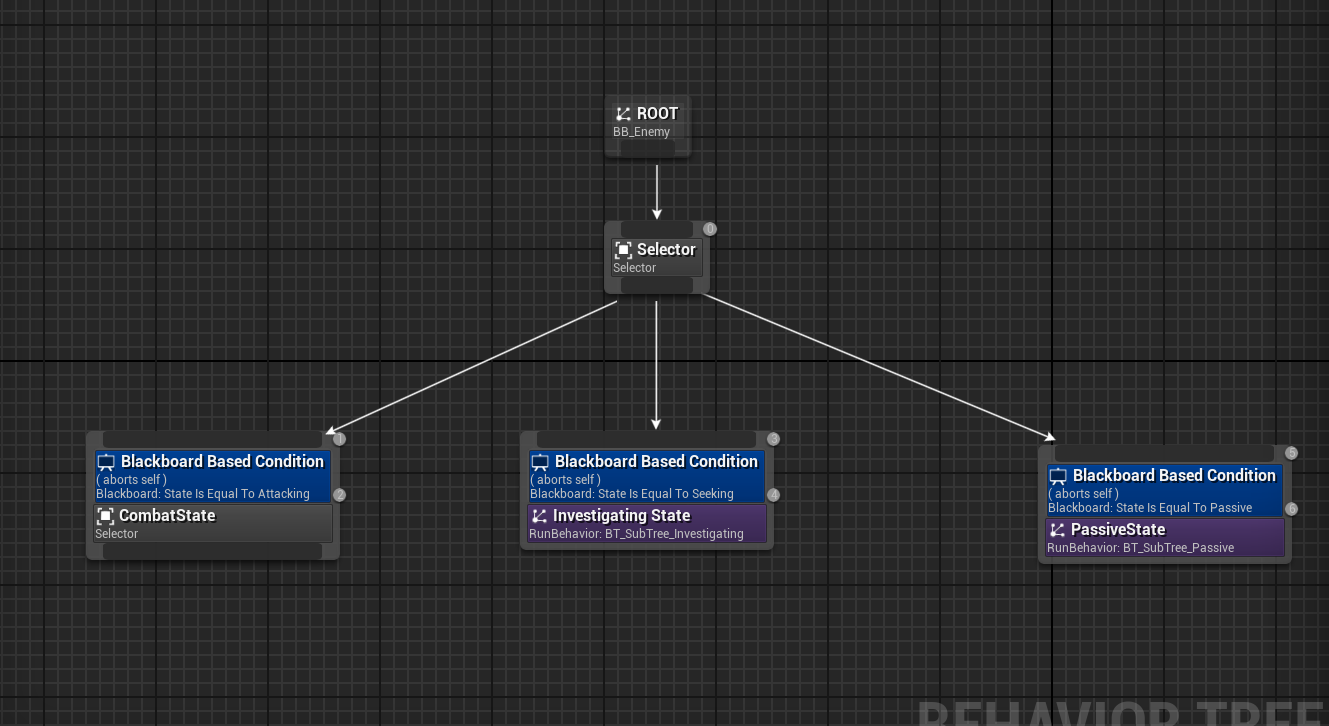

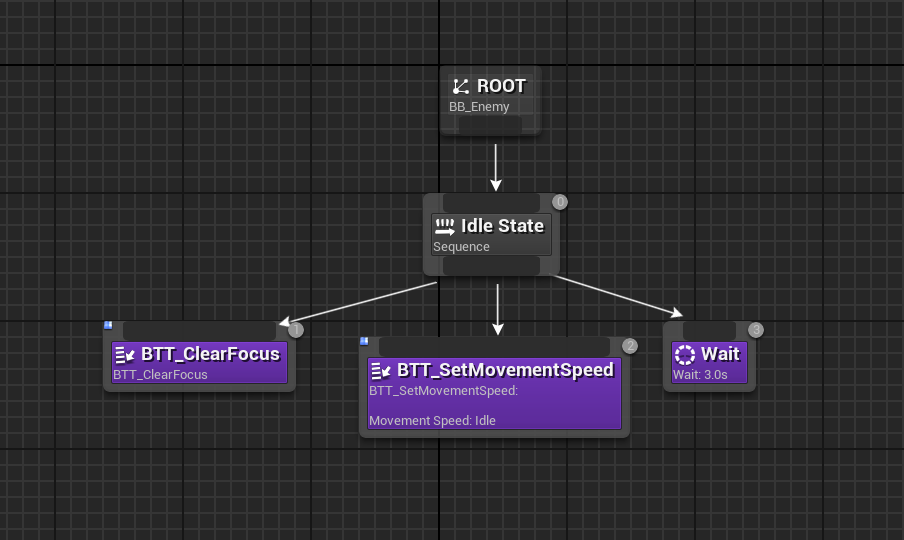

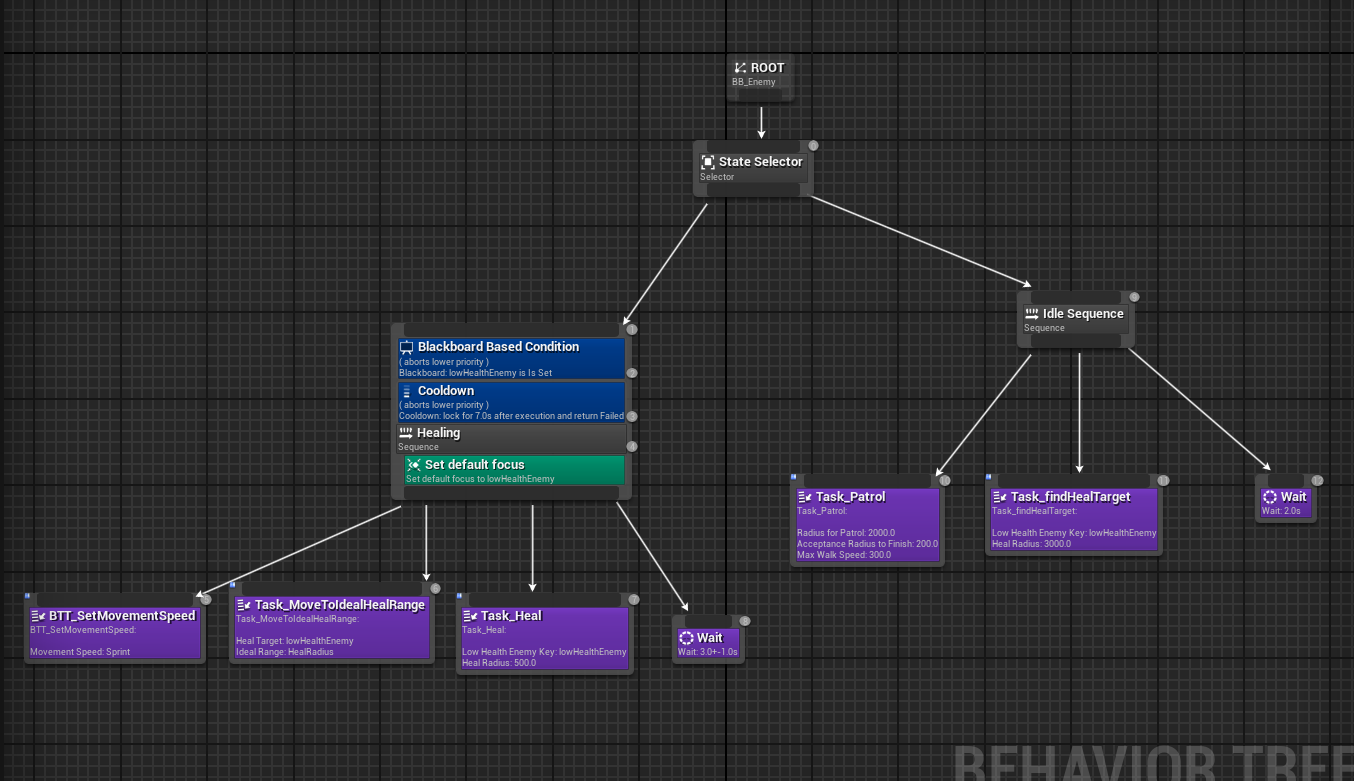

Creating Blood and Sand's AI combat system meant solving a core design challenge: how do you make AI enemies that feel intelligent, distinct, and fun to fight? My approach was to give each enemy archetype a unique personality through its combat behaviors, creating a rock-paper-scissors dynamic that keeps players engaged and thinking tactically.

My Core Design Philosophy

Distinct Personalities

Each enemy needed to feel like a different opponent, not just a stat variation

Tactical Variety

Players should need different strategies for each enemy type

Group Dynamics

Enemies should complement each other when fighting together

Emotional Impact

Combat should feel visceral, strategic, and rewarding
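The rock-paper-scissors dynamic, together with the Tactical Variety and Group Dynamics goals, can be sketched as a small counter table. This is a minimal Python illustration built on assumptions: the archetype names (BRUTE, DUELIST, SKIRMISHER), the player tactics, and the +1/0/-1 scoring are all hypothetical and are not taken from Blood and Sand's actual roster or code.

```python
from enum import Enum


class Archetype(Enum):
    # Hypothetical archetype names, used only for illustration.
    BRUTE = "brute"            # slow, telegraphed heavy swings
    DUELIST = "duelist"        # fast, feints and parries
    SKIRMISHER = "skirmisher"  # keeps distance and harasses


class Tactic(Enum):
    # Hypothetical player responses.
    PARRY = "parry"
    HEAVY_ATTACK = "heavy_attack"
    GAP_CLOSE = "gap_close"


# The tactic that beats each archetype ("rock beats scissors").
COUNTERED_BY = {
    Archetype.BRUTE: Tactic.PARRY,           # telegraphed swings are easy to read
    Archetype.DUELIST: Tactic.HEAVY_ATTACK,  # heavy hits break a guard
    Archetype.SKIRMISHER: Tactic.GAP_CLOSE,  # harassers fold up close
}

# The tactic each archetype punishes. The cycle is shifted by one, so every
# tactic wins against exactly one archetype and loses to exactly one.
PUNISHES = {
    Archetype.BRUTE: Tactic.GAP_CLOSE,          # rushing in invites a heavy swing
    Archetype.DUELIST: Tactic.PARRY,            # feints bait out early parries
    Archetype.SKIRMISHER: Tactic.HEAVY_ATTACK,  # slow wind-ups get kited
}


def matchup(tactic: Tactic, enemy: Archetype) -> int:
    """+1 if the tactic counters the enemy, -1 if the enemy punishes it, else 0."""
    if COUNTERED_BY[enemy] is tactic:
        return 1
    if PUNISHES[enemy] is tactic:
        return -1
    return 0


def group_score(tactic: Tactic, enemies: list[Archetype]) -> int:
    """Sum of matchups for one tactic against every enemy in an encounter."""
    return sum(matchup(tactic, enemy) for enemy in enemies)


def best_tactic(enemies: list[Archetype]) -> Tactic:
    """Highest-scoring tactic for a group; ties go to the first Tactic declared."""
    return max(Tactic, key=lambda t: group_score(t, enemies))


if __name__ == "__main__":
    # A pair of brutes rewards a single, focused answer.
    print(best_tactic([Archetype.BRUTE, Archetype.BRUTE]))  # Tactic.PARRY
    # A mixed group scores 0 for every tactic: no single answer dominates.
    print({t.name: group_score(t, list(Archetype)) for t in Tactic})
```

Because each tactic counters exactly one archetype and is punished by exactly one, a mixed group scores zero for every tactic, so the player has to read each enemy instead of repeating one answer. A strict cycle is simply the easiest way to guarantee that property in a sketch; weighted matchups would be an equally valid design.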

[Figure: The 3.5 km combat arena where all AI behaviors come together. Panels: Multi-enemy Encounter; Tactical Positioning; Dynamic Combat Flow; Player Character]